Guided RL

We implemented and tested three intuitions for when and how an expert should intervene during student learning.

Overview

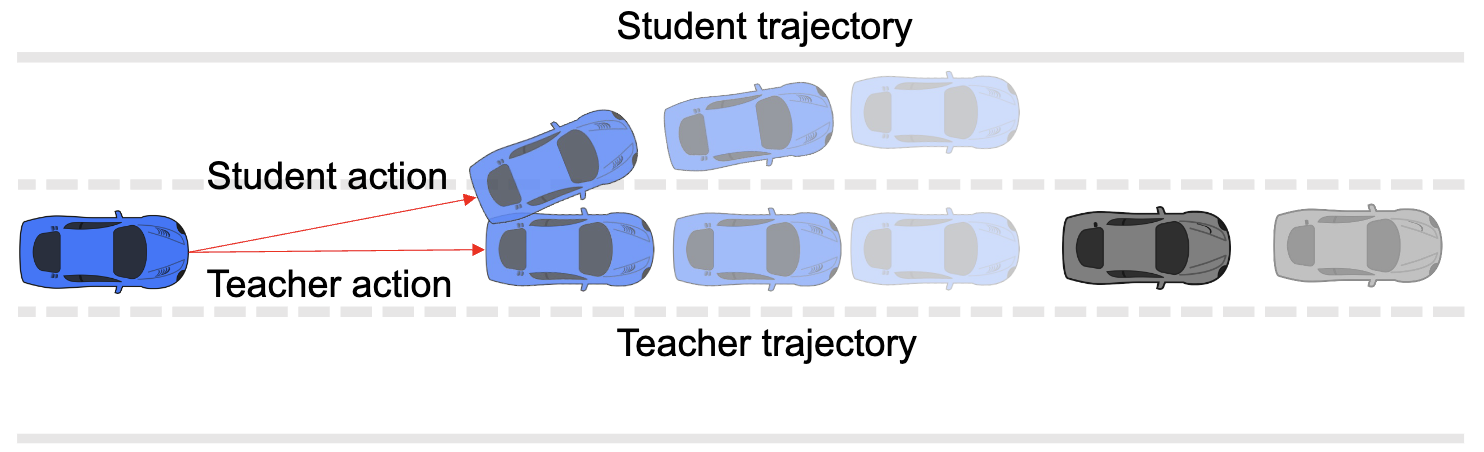

Expert intervention and demonstration are an important way to kick-start reinforcement learning, improving both learning efficiency and exploration safety. Building on Guarded Policy Optimization With Imperfect Online Demonstrations [1], illustrated in the figure above, we explore three intervention intuitions:

- Confidence-Based Advising: Condition the intervention probability on the expert's confidence, measured by the variance of the expert's action distribution, \( \mathrm{Var}(\pi_t(\cdot \mid s)) \).

- Importance-Based Advising: Condition the intervention probability on state importance, defined as the range of state-action values in that state, \( \max_a Q(s,a) - \min_a Q(s,a) \).

- Combined Decision Maker: Mix the expert's and the student's action distributions, weighted by Q-value, to encourage potentially broader exploration.
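The three rules above can be sketched roughly as follows. This is a minimal illustration rather than the code used in this project: the function names, the exponential mappings from variance or Q-range to a probability, the `scale` and `temperature` parameters, the discrete action space, and the softmax weighting over expected Q-values are all assumptions made for the example.

```python
import numpy as np


def confidence_intervention_prob(expert_action_std, scale=1.0):
    """Confidence-based advising (assumed mapping).

    Confidence is measured by the variance of the expert's action
    distribution; here a lower variance gives a higher intervention
    probability via exp(-scale * variance).
    """
    std = np.asarray(expert_action_std, dtype=float)
    variance = float(np.mean(np.square(std)))
    return float(np.exp(-scale * variance))


def importance_intervention_prob(q_values, temperature=1.0):
    """Importance-based advising (assumed mapping).

    State importance is max_a Q - min_a Q; a larger range gives a
    higher intervention probability via 1 - exp(-importance / temperature).
    """
    q = np.asarray(q_values, dtype=float)
    importance = float(np.max(q) - np.min(q))
    return float(1.0 - np.exp(-importance / temperature))


def combined_action_distribution(expert_probs, student_probs, q_values):
    """Combined decision maker for discrete actions (assumed weighting).

    Each policy is weighted by a softmax over its expected Q-value,
    and the two action distributions are mixed with those weights.
    """
    expert = np.asarray(expert_probs, dtype=float)
    student = np.asarray(student_probs, dtype=float)
    q = np.asarray(q_values, dtype=float)

    expected_q = np.array([expert @ q, student @ q])
    expected_q = expected_q - expected_q.max()  # numerical stability
    weights = np.exp(expected_q) / np.exp(expected_q).sum()

    mixed = weights[0] * expert + weights[1] * student
    return mixed / mixed.sum()


def should_intervene(intervention_prob, rng):
    """Sample a Bernoulli intervention decision from a probability."""
    return bool(rng.random() < intervention_prob)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p_conf = confidence_intervention_prob([0.2, 0.3])
    p_imp = importance_intervention_prob([1.0, 4.0, 2.5])
    mixed = combined_action_distribution([0.9, 0.1], [0.4, 0.6], [1.0, 0.0])
    print(p_conf, p_imp, mixed, should_intervene(p_conf, rng))
```

In this sketch the first two rules only decide whether the expert takes over in a given state, while the third changes the distribution that actions are sampled from, which is why it is a separate function rather than another probability.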

Results

We compared our modifications against the TS2C method proposed in [1]. The first two variations improve on TS2C, supporting our intuitions, while the third performs worse.

The detailed results can be found here:

[1] Xue, Zhenghai, et al. “Guarded policy optimization with imperfect online demonstrations.” arXiv preprint arXiv:2303.01728 (2023).